Study checklist for a budding data scientist

I recently met someone who wants to learn data science, just like I did years ago! This blog post is for you, dear future data scientist.

Looking back at my data science career, finding learning resources was never the problem: everything I needed was freely accessible on the internet. The problem was actually the opposite. Every blog, book, tutorial or tool presented itself as the most important thing in the universe, something you definitely need to know. The hardest part was prioritizing all the topics correctly.

So to help you, I came up with this list of topics a budding data scientist should know. It is not the only possible learning path or the only flavour of data science (it is a very vaguely defined job), but it is a good compromise between being exhaustive and being concise. Use it as a springboard to guide you until you know enough to guide yourself.

Legend

- Red - Know this well; it will be your bread and butter.

- Green - Know this well enough that, at worst, you only need to look up some specifics.

- Blue - Know that this exists and what it is; you will learn the details later as you need them.

Basic tools

- Visual Studio Code - I recommend this code editor (more precisely an IDE, an Integrated Development Environment, which lets you edit code, run it and do other useful things). IDEs are usually packed with features, but you don't need to learn all of them; just learn the basics for now. There are also many good alternatives to VS Code, e.g. Spyder. Try a few, pick one and stick with it.

- Jupyter Notebook - An interactive document format (and an environment for running it) that mixes code, its output and text, which makes it useful for data exploration and visualization. Notebooks work in both VS Code and Google Colab.

- Basic work with the Linux terminal (or Windows command line) - Learn how to change the directory, list its contents, run a command and display help for a command.

- Git

- GitHub - or GitLab, Gitea or a similar Git hosting service.

Programming

- Python

- SQL
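Python and SQL meet more often than you might expect. If you want to practice SQL without installing a database server, Python's built-in `sqlite3` module is enough. A minimal sketch; the `sales` table and its numbers are made up for illustration:

```python
import sqlite3

# An in-memory SQLite database: nothing is written to disk.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")

# Hypothetical rows; "?" placeholders keep values out of the SQL string.
conn.executemany(
    "INSERT INTO sales (region, amount) VALUES (?, ?)",
    [("north", 120.0), ("south", 80.0), ("north", 30.0)],
)

# Aggregate with SQL: total sales per region, largest first.
rows = conn.execute(
    "SELECT region, SUM(amount) AS total FROM sales "
    "GROUP BY region ORDER BY total DESC"
).fetchall()
print(rows)  # [('north', 150.0), ('south', 80.0)]
conn.close()
```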

Data formats

- CSV - Basic format for tabular data, compatible with Excel.

- JSON - Widespread format that can also handle nested, non-tabular data. A great tool for exploring JSON is jq.
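To see the practical difference between the two formats, here is a small sketch using only Python's standard `csv` and `json` modules. The data is hypothetical, and `io.StringIO` stands in for a real file:

```python
import csv
import io
import json

# A small CSV table; io.StringIO behaves like an opened file.
csv_text = "name,age\nAlice,34\nBob,29\n"
rows = list(csv.DictReader(io.StringIO(csv_text)))
# CSV has no types: every value comes back as a string.
print(rows[0])  # {'name': 'Alice', 'age': '34'}

# JSON can nest lists and objects, and numbers stay numbers.
json_text = '{"name": "Alice", "age": 34, "languages": ["Python", "SQL"]}'
record = json.loads(json_text)
print(record["languages"][1])  # SQL
```

With CSV, converting types is up to you (Pandas infers column types for you when it loads a CSV).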

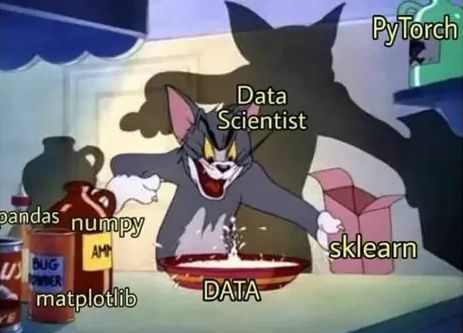

Python packages and tools

- Pandas - General, high-level work with tables; the Python equivalent of Excel. Learn to load data, transform it and select parts of it; learn the rest as you go.

- Matplotlib - The most widespread plotting package, also used under the hood by Pandas, Seaborn and Scikit-learn. Learn how to make basic plots, format them (set the title, label the axes, change the axis range) and save a plot to a file.

- Seaborn - A very useful extension of Matplotlib's capabilities; it is a good idea to get used to it for basic plots instead of plain Matplotlib.

- NumPy - Matrix multiplication and other linear algebra; anything to do with numerical arrays.

- Scikit-learn - A huge collection of models and tools for model evaluation, from basic to advanced. Learn the evaluation tools and what the model interface looks like (fit, predict), and treat the list of available models as a checklist for further study.

- SciPy - Advanced mathematics, statistics and optimization.

- PyTorch and/or TensorFlow - Python packages for neural networks.

- pip - Package manager; you will need it to install all these packages anyway.

- Conda - Package manager and virtual environment manager.
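The Pandas and Matplotlib basics mentioned above (load, transform, select, plot, format, save) fit into a few lines. A minimal sketch with made-up temperature data; the column names and the output file name `temperature.png` are hypothetical:

```python
import io

import matplotlib
matplotlib.use("Agg")  # render without a display, e.g. on a server
import matplotlib.pyplot as plt
import pandas as pd

# Load: read_csv accepts a file path; StringIO stands in for a file here.
csv_text = "city,month,temp\nOslo,1,-4\nOslo,2,-3\nRome,1,8\nRome,2,9\n"
df = pd.read_csv(io.StringIO(csv_text))

# Transform: add a derived column (Celsius to Fahrenheit).
df["temp_f"] = df["temp"] * 9 / 5 + 32

# Select: rows matching a condition, and only some columns.
rome = df.loc[df["city"] == "Rome", ["month", "temp"]]

# Summarize: mean temperature per city.
means = df.groupby("city")["temp"].mean()
print(means)

# Plot one line per city, format the axes and save to a file.
fig, ax = plt.subplots()
for city, group in df.groupby("city"):
    ax.plot(group["month"], group["temp"], marker="o", label=city)
ax.set_title("Monthly temperature")
ax.set_xlabel("Month")
ax.set_ylabel("Temperature (°C)")
ax.set_ylim(-10, 15)
ax.legend()
fig.savefig("temperature.png")
plt.close(fig)
```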

Math

- Linear algebra:

  - Vectors and their dot product

  - Matrix multiplication

  - Geometric interpretation

- Statistics:

  - Probability

  - Probability distributions and the difference between probability and probability density

  - Normal (a.k.a. Gaussian) distribution

  - Categorical distribution

  - Binomial distribution

  - Poisson distribution

  - Correlation and rank correlation

  - Confidence interval

  - Concept of a statistical test

  - P-value

  - P-hacking (as something to avoid)

  - Conditional probability

- Cognitive biases and statistical fallacies:

  - Simpson’s paradox

  - Selection bias

  - Survivorship bias

  - Confounding variables and spurious correlation

- Unintuitive properties of high-dimensional spaces

- Introduction to Bayesian statistics:

  - Bayes' rule

  - Prior and posterior probability distributions

  - Difference between the maximum likelihood (ML) estimate and the maximum a posteriori (MAP) estimate
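Two items from this list are easiest to grasp with numbers: matrix products and Bayes' rule. A sketch using NumPy, with made-up probabilities for a hypothetical medical test (1% prevalence, 90% sensitivity, 5% false positive rate):

```python
import numpy as np

# Dot product: sum of element-wise products.
a = np.array([1.0, 2.0, 3.0])
b = np.array([4.0, 5.0, 6.0])
print(a @ b)  # 32.0  (1*4 + 2*5 + 3*6)

# Geometric interpretation: this matrix rotates vectors by 90 degrees.
m = np.array([[0.0, -1.0], [1.0, 0.0]])
v = np.array([1.0, 0.0])
print(m @ v)  # [0. 1.]  the x axis is rotated onto the y axis

# Bayes' rule: P(D | +) = P(+ | D) * P(D) / P(+)
p_d = 0.01                # prior: 1% of people have the condition
p_pos_given_d = 0.90      # sensitivity
p_pos_given_not_d = 0.05  # false positive rate
# Total probability of a positive test, over both cases.
p_pos = p_pos_given_d * p_d + p_pos_given_not_d * (1 - p_d)
posterior = p_pos_given_d * p_d / p_pos
print(round(posterior, 3))  # 0.154
```

Even with a fairly accurate test, a positive result here means only about a 15% chance of having the condition, because the prior is so low.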

Visualization and descriptive statistics

- Lineplot

- Scatterplot

- Histogram

- Boxplot

- KDE (kernel density estimate, a smooth alternative to a histogram)

- Quantiles

- Mean, mode, median, variance and standard deviation
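Python's standard `statistics` module covers the descriptive statistics above. A minimal sketch on hypothetical data with one outlier, which shows why it pays to look at the median as well as the mean:

```python
import statistics

data = [2, 3, 3, 4, 5, 6, 40]  # hypothetical values; 40 is an outlier

print(statistics.mean(data))      # 9
print(statistics.median(data))    # 4
print(statistics.mode(data))      # 3
print(statistics.variance(data))  # sample variance (n - 1 in the denominator)
print(statistics.stdev(data))     # sample standard deviation
print(statistics.quantiles(data, n=4))  # quartiles: [3.0, 4.0, 6.0]
```

The single outlier pulls the mean up to 9, while the median stays at 4.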

Modeling

- Difference between regression and classification

- Linear regression and logistic regression

- Overfitting and strategies against it:

  - Train/test/validation split

  - Cross-validation

  - Regularization

  - Data augmentation

- Data normalization - which models need it and which don’t

- Confusion matrix - recall (sensitivity), precision, specificity, accuracy and tradeoffs between them

- ROC curve - ROC AUC score (perhaps also precision-recall curve)

- Impact of data imbalance on training and model evaluation metrics

- Decision boundary - linear vs. non-linear models, the concept of linearly separable data

- Feature engineering, especially for letting linear models capture non-linear relationships (see also the “kernel trick”)

- Decision tree

- Neural networks for classification

- Bias vs. variance tradeoff

- Ensemble models

- Clustering

- Topic modeling

- Dimensionality reduction

- Autoencoders and embeddings
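Several items from this list (train/test split, cross-validation, normalization, the confusion matrix, ROC AUC and class imbalance) come together in a typical scikit-learn workflow. A minimal sketch on synthetic data from `make_classification`; logistic regression, 5 folds and a 90/10 class ratio are arbitrary choices for illustration:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import (confusion_matrix, precision_score,
                             recall_score, roc_auc_score)
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic, imbalanced binary data (roughly 90% class 0, 10% class 1).
X, y = make_classification(
    n_samples=1000, n_features=10, weights=[0.9, 0.1], random_state=0
)

# Hold out a test set; stratify keeps the class ratio equal in both parts.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Normalization + model in one pipeline, so the scaler only sees training data.
model = make_pipeline(StandardScaler(), LogisticRegression())

# 5-fold cross-validation on the training set, scored by ROC AUC.
cv_auc = cross_val_score(model, X_train, y_train, cv=5, scoring="roc_auc")
print("CV ROC AUC:", cv_auc.mean())

model.fit(X_train, y_train)
y_pred = model.predict(X_test)               # hard labels (0.5 threshold)
y_score = model.predict_proba(X_test)[:, 1]  # predicted probability of class 1

cm = confusion_matrix(y_test, y_pred)  # rows: true class, columns: predicted
print(cm)
print("precision:", precision_score(y_test, y_pred))
print("recall:", recall_score(y_test, y_pred))
auc = roc_auc_score(y_test, y_score)
print("test ROC AUC:", auc)

# Imbalance check: accuracy of a "model" that always predicts class 0.
print("always-0 accuracy:", (y_test == 0).mean())
```

Putting the scaler inside the pipeline matters: during cross-validation it is refit on each training fold, so the validation fold never influences the normalization. The last line shows why accuracy alone is misleading on imbalanced data: always predicting the majority class already scores around 90%.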

Ethics

- Biased data leads to biased models.

- Misinterpretation of model output - the user doesn’t understand the math the way you do.

- Overestimation of model output reliability - the user doesn’t understand the limitations of the model the way you do.

- Unintended use - users do whatever they want with your model; sometimes the result is great, sometimes it is stupid, and sometimes it is evil.

- Automated decision making - don’t do it; it is better to assist human decision makers, keeping the previous points in mind.

- When not to do machine learning - good UI and good math are often a better solution than a good model.

What does the usual data science workflow look like?

In addition to thinking about business needs, forming and testing hypotheses, communication and other tasks, the “manual” part of data science usually consists of these steps:

- Load data.

- Explore it - What does it look like? How much of it is there? What data is missing? Is it biased in some way? Is it representative of the real world? What data do you actually need? Are there outliers?

- Use descriptive statistics and visualization to summarize data properties.

- Clean the data - remove duplicates and deal with missing data and outliers.

- Model the data - understand what answer you want and what type of model can give it to you.

- Evaluate your model - does it do what you want? If not, return to the previous step.

- Visualize and clearly communicate the conclusions of your modeling - what do they mean? How certain are you?

- If needed, deploy the model for repeated use.
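The load, explore and clean steps above might look like this in Pandas. A minimal sketch on hypothetical height data; filling missing values with the median and dropping points outside 1.5 × IQR (Tukey's fences) are just one common choice, not a universal rule:

```python
import io

import pandas as pd

# Hypothetical raw data: a duplicate row (id 2), a missing value (id 3)
# and an obvious outlier (id 5, probably entered in millimetres).
raw = io.StringIO("id,height_cm\n1,172\n2,165\n2,165\n3,\n4,180\n5,1750\n")

# Load.
df = pd.read_csv(raw)

# Explore: size, missing values per column, summary statistics.
print(df.shape)
print(df.isna().sum())
print(df.describe())

# Clean: drop exact duplicate rows.
df = df.drop_duplicates()
# Fill missing values with the median, which the outlier barely affects.
df["height_cm"] = df["height_cm"].fillna(df["height_cm"].median())
# Drop outliers outside 1.5 * IQR around the quartiles.
q1, q3 = df["height_cm"].quantile([0.25, 0.75])
iqr = q3 - q1
df = df[df["height_cm"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)]
print(df)
```

Whether to drop, fix or keep an outlier is a judgment call: here 1750 looks like a unit error, and in real data it might be worth correcting rather than deleting.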

Conclusion

Good luck!